回调:观察、自定义和控制智能体行为¶

回调是 ADK 的核心功能,提供了一种强大的机制来挂钩智能体的执行过程。它们允许你在特定的预定义点观察、自定义甚至控制智能体的行为,而无需修改 ADK 框架的核心代码。

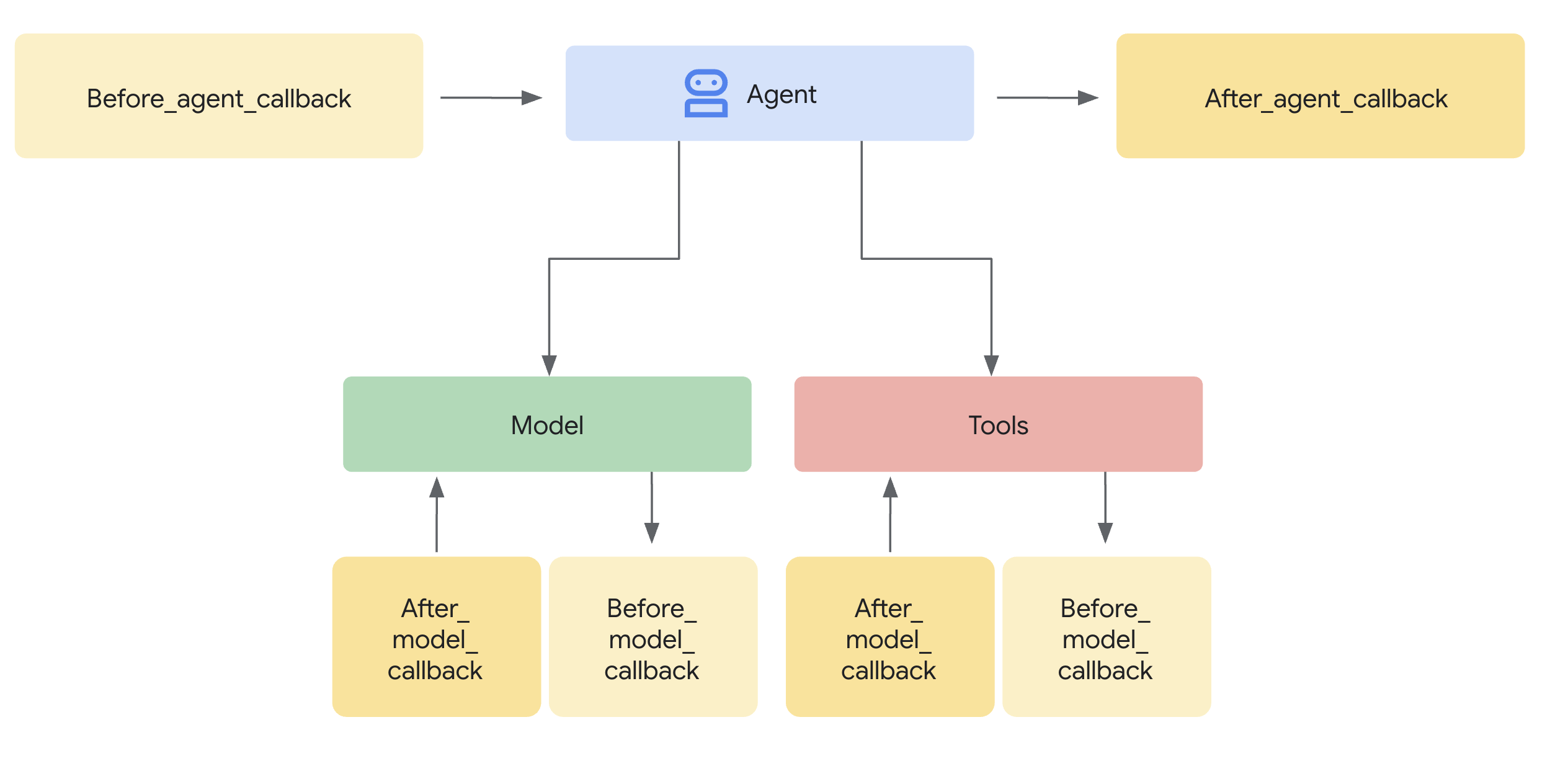

它们是什么? 本质上,回调是你定义的标准函数。然后在创建智能体时,你将这些函数与智能体关联。ADK 框架在关键阶段自动调用你的函数,让你观察或干预。将其想象为智能体过程中的检查点:

- 在智能体开始处理请求的主要工作之前,以及完成之后: 当你要求智能体做某事时(例如,回答问题),它会运行其内部逻辑来推断响应。

智能体前置回调在特定请求的主要工作开始之前执行。智能体后置回调在智能体完成该请求的所有步骤并准备好最终结果之后执行,但就在结果返回之前。- 这种"主要工作"包括智能体处理该单个请求的整个过程。这可能涉及决定调用 LLM、实际调用 LLM、决定使用工具、使用工具、处理结果,最后整理答案。这些回调本质上包装了从接收输入到为该次交互产生最终输出的整个序列。

- 在向大型语言模型 (LLM) 发送请求之前,或从 LLM 接收响应之后: 这些回调(

模型前置、模型后置)允许你检查或修改专门进出 LLM 的数据。 - 在执行工具(如 Python 函数或另一个智能体)之前或完成之后: 类似地,

工具前置和工具后置回调为你提供了专门围绕智能体调用的工具执行的控制点。

为什么使用它们? 回调解锁了显著的灵活性并支持高级智能体功能:

- 观察与调试: 在关键步骤记录详细信息,用于监控和故障排除。

- 自定义与控制: 根据你的逻辑修改流经智能体的数据(如 LLM 请求或工具结果),甚至完全跳过某些步骤。

- 实现防护机制: 强制执行安全规则,验证输入/输出,或阻止不允许的操作。

- 管理状态: 在执行期间读取或动态更新智能体的会话状态。

- 集成与增强: 触发外部操作(API 调用、通知)或添加缓存等功能。

Tip

在实现安全防护措施和策略时,使用 ADK 插件以获得比回调更好的模块化和灵活性。有关更多详细信息,请参阅 安全防护措施的回调和插件。

如何添加它们:

代码

from google.adk.agents import LlmAgent

from google.adk.agents.callback_context import CallbackContext

from google.adk.models import LlmResponse, LlmRequest

from typing import Optional

# --- Define your callback function ---

def my_before_model_logic(

callback_context: CallbackContext, llm_request: LlmRequest

) -> Optional[LlmResponse]:

print(f"Callback running before model call for agent: {callback_context.agent_name}")

# ... your custom logic here ...

return None # Allow the model call to proceed

# --- Register it during Agent creation ---

my_agent = LlmAgent(

name="MyCallbackAgent",

model="gemini-2.0-flash", # Or your desired model

instruction="Be helpful.",

# Other agent parameters...

before_model_callback=my_before_model_logic # Pass the function here

)

import {

LlmAgent,

InMemoryRunner,

CallbackContext,

LlmRequest,

LlmResponse,

Event,

isFinalResponse,

} from "@google/adk";

import { createUserContent } from "@google/genai";

import type { Content } from "@google/genai";

const MODEL_NAME = "gemini-2.5-flash";

const APP_NAME = "basic_callback_app";

const USER_ID = "test_user_basic";

const SESSION_ID = "session_basic_001";

// --- Define your callback function ---

function myBeforeModelLogic({

context,

request,

}: {

context: CallbackContext;

request: LlmRequest;

}): LlmResponse | undefined {

console.log(

`Callback running before model call for agent: ${context.agentName}`

);

// ... your custom logic here ...

return undefined; // Allow the model call to proceed

}

// --- Register it during Agent creation ---

const myAgent = new LlmAgent({

name: "MyCallbackAgent",

model: MODEL_NAME,

instruction: "Be helpful.",

beforeModelCallback: myBeforeModelLogic,

});

package main

import (

"context"

"fmt"

"log"

"strings"

"google.golang.org/adk/agent"

"google.golang.org/adk/agent/llmagent"

"google.golang.org/adk/model"

"google.golang.org/adk/model/gemini"

"google.golang.org/adk/runner"

"google.golang.org/adk/session"

"google.golang.org/genai"

)

// onBeforeModel is a callback function that gets triggered before an LLM call.

func onBeforeModel(ctx agent.CallbackContext, req *model.LLMRequest) (*model.LLMResponse, error) {

log.Println("--- onBeforeModel Callback Triggered ---")

log.Printf("Model Request to be sent: %v\n", req)

// Returning nil allows the default LLM call to proceed.

return nil, nil

}

func runBasicExample() {

const (

appName = "CallbackBasicApp"

userID = "test_user_123"

)

ctx := context.Background()

geminiModel, err := gemini.NewModel(ctx, modelName, &genai.ClientConfig{})

if err != nil {

log.Fatalf("Failed to create model: %v", err)

}

// Register the callback function in the agent configuration.

agentCfg := llmagent.Config{

Name: "SimpleAgent",

Model: geminiModel,

BeforeModelCallbacks: []llmagent.BeforeModelCallback{onBeforeModel},

}

simpleAgent, err := llmagent.New(agentCfg)

if err != nil {

log.Fatalf("Failed to create agent: %v", err)

}

sessionService := session.InMemoryService()

r, err := runner.New(runner.Config{

AppName: appName,

Agent: simpleAgent,

SessionService: sessionService,

})

if err != nil {

log.Fatalf("Failed to create runner: %v", err)

}

import com.google.adk.agents.CallbackContext;

import com.google.adk.agents.Callbacks;

import com.google.adk.agents.LlmAgent;

import com.google.adk.models.LlmRequest;

import java.util.Optional;

public class AgentWithBeforeModelCallback {

public static void main(String[] args) {

// --- Define your callback logic ---

Callbacks.BeforeModelCallbackSync myBeforeModelLogic =

(CallbackContext callbackContext, LlmRequest llmRequest) -> {

System.out.println(

"Callback running before model call for agent: " + callbackContext.agentName());

// ... your custom logic here ...

// Return Optional.empty() to allow the model call to proceed,

// similar to returning None in the Python example.

// If you wanted to return a response and skip the model call,

// you would return Optional.of(yourLlmResponse).

return Optional.empty();

};

// --- Register it during Agent creation ---

LlmAgent myAgent =

LlmAgent.builder()

.name("MyCallbackAgent")

.model("gemini-2.0-flash") // Or your desired model

.instruction("Be helpful.")

// Other agent parameters...

.beforeModelCallbackSync(myBeforeModelLogic) // Pass the callback implementation here

.build();

}

}

回调机制:拦截与控制¶

当 ADK 框架遇到可以运行回调的点(例如,就在调用 LLM 之前)时,它会检查你是否为该智能体提供了相应的回调函数。如果提供了,框架会执行你的函数。

上下文至关重要: 你的回调函数不是孤立调用的。框架提供特殊的上下文对象(CallbackContext 或 ToolContext)作为参数。这些对象包含关于智能体执行当前状态的重要信息,包括调用详情、会话状态,以及可能对服务(如 artifacts 或 memory)的引用。你使用这些上下文对象来了解情况并与框架交互。(详见"上下文对象"专门章节)。

控制流程(核心机制): 回调最强大的方面在于其返回值如何影响智能体后续的操作。这就是你拦截和控制执行流程的方式:

-

return None(允许默认行为):- 具体的返回类型可能因语言而异。在 Java 中,等效的返回类型是

Optional.empty()。请参阅 API 文档以获取特定语言的指导。 - 这是信号你的回调已完成其工作(例如,日志记录、检查、对可变输入参数如

llm_request的轻微修改)的标准方式,并且 ADK 智能体应该继续其正常操作。 - 对于

before_*回调(before_agent、before_model、before_tool),返回None意味着序列中的下一步(运行智能体逻辑、调用 LLM、执行工具)将会发生。 - 对于

after_*回调(after_agent、after_model、after_tool),返回None意味着前一步刚刚产生的结果(智能体的输出、LLM 的响应、工具的结果)将被原样使用。

- 具体的返回类型可能因语言而异。在 Java 中,等效的返回类型是

-

return <特定对象>(覆盖默认行为):- 返回特定类型的对象(而不是

None)是你覆盖 ADK 智能体默认行为的方式。框架将使用你返回的对象,并跳过通常会跟随的步骤或替换刚刚生成的结果。 before_agent_callback→types.Content:跳过智能体的主要执行逻辑(_run_async_impl/_run_live_impl)。返回的Content对象立即被视为智能体本轮次的最终输出。适用于直接处理简单请求或实施访问控制。before_model_callback→LlmResponse:跳过对外部大型语言模型的调用。返回的LlmResponse对象被处理,就好像它是来自 LLM 的实际响应一样。适用于实现输入防护措施、提示验证或提供缓存响应。before_tool_callback→dict或Map:跳过实际工具函数(或子智能体)的执行。返回的dict被用作工具调用的结果,然后通常传递回 LLM。适用于验证工具参数、应用策略限制或返回模拟/缓存的工具结果。after_agent_callback→types.Content:替换智能体运行逻辑刚刚产生的Content。after_model_callback→LlmResponse:替换从 LLM 接收的LlmResponse。适用于清理输出、添加标准免责声明或修改 LLM 的响应结构。after_tool_callback→dict或Map:替换工具返回的dict结果。允许在工具输出发送回 LLM 之前进行后处理或标准化。

- 返回特定类型的对象(而不是

概念代码示例(防护机制):

此示例演示了使用 before_model_callback 实现防护机制的常见模式。

Code

# Copyright 2025 Google LLC

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

from google.adk.agents import LlmAgent

from google.adk.agents.callback_context import CallbackContext

from google.adk.models import LlmResponse, LlmRequest

from google.adk.runners import Runner

from typing import Optional

from google.genai import types

from google.adk.sessions import InMemorySessionService

GEMINI_2_FLASH="gemini-2.0-flash"

# --- Define the Callback Function ---

def simple_before_model_modifier(

callback_context: CallbackContext, llm_request: LlmRequest

) -> Optional[LlmResponse]:

"""Inspects/modifies the LLM request or skips the call."""

agent_name = callback_context.agent_name

print(f"[Callback] Before model call for agent: {agent_name}")

# Inspect the last user message in the request contents

last_user_message = ""

if llm_request.contents and llm_request.contents[-1].role == 'user':

if llm_request.contents[-1].parts:

last_user_message = llm_request.contents[-1].parts[0].text

print(f"[Callback] Inspecting last user message: '{last_user_message}'")

# --- Modification Example ---

# Add a prefix to the system instruction

original_instruction = llm_request.config.system_instruction or types.Content(role="system", parts=[])

prefix = "[Modified by Callback] "

# Ensure system_instruction is Content and parts list exists

if not isinstance(original_instruction, types.Content):

# Handle case where it might be a string (though config expects Content)

original_instruction = types.Content(role="system", parts=[types.Part(text=str(original_instruction))])

if not original_instruction.parts:

original_instruction.parts.append(types.Part(text="")) # Add an empty part if none exist

# Modify the text of the first part

modified_text = prefix + (original_instruction.parts[0].text or "")

original_instruction.parts[0].text = modified_text

llm_request.config.system_instruction = original_instruction

print(f"[Callback] Modified system instruction to: '{modified_text}'")

# --- Skip Example ---

# Check if the last user message contains "BLOCK"

if "BLOCK" in last_user_message.upper():

print("[Callback] 'BLOCK' keyword found. Skipping LLM call.")

# Return an LlmResponse to skip the actual LLM call

return LlmResponse(

content=types.Content(

role="model",

parts=[types.Part(text="LLM call was blocked by before_model_callback.")],

)

)

else:

print("[Callback] Proceeding with LLM call.")

# Return None to allow the (modified) request to go to the LLM

return None

# Create LlmAgent and Assign Callback

my_llm_agent = LlmAgent(

name="ModelCallbackAgent",

model=GEMINI_2_FLASH,

instruction="You are a helpful assistant.", # Base instruction

description="An LLM agent demonstrating before_model_callback",

before_model_callback=simple_before_model_modifier # Assign the function here

)

APP_NAME = "guardrail_app"

USER_ID = "user_1"

SESSION_ID = "session_001"

# Session and Runner

async def setup_session_and_runner():

session_service = InMemorySessionService()

session = await session_service.create_session(app_name=APP_NAME, user_id=USER_ID, session_id=SESSION_ID)

runner = Runner(agent=my_llm_agent, app_name=APP_NAME, session_service=session_service)

return session, runner

# Agent Interaction

async def call_agent_async(query):

content = types.Content(role='user', parts=[types.Part(text=query)])

session, runner = await setup_session_and_runner()

events = runner.run_async(user_id=USER_ID, session_id=SESSION_ID, new_message=content)

async for event in events:

if event.is_final_response():

final_response = event.content.parts[0].text

print("Agent Response: ", final_response)

# Note: In Colab, you can directly use 'await' at the top level.

# If running this code as a standalone Python script, you'll need to use asyncio.run() or manage the event loop.

await call_agent_async("write a joke on BLOCK")

/**

* Copyright 2025 Google LLC

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import {

LlmAgent,

InMemoryRunner,

CallbackContext,

isFinalResponse,

} from "@google/adk";

import { createUserContent } from "@google/genai";

const MODEL_NAME = "gemini-2.5-flash";

const APP_NAME = "before_model_callback_app";

const USER_ID = "test_user_before_model";

const SESSION_ID_BLOCK = "session_block_model_call";

const SESSION_ID_NORMAL = "session_normal_model_call";

// --- Define the Callback Function ---

function simpleBeforeModelModifier({

context,

request,

}: {

context: CallbackContext;

request: any;

}): any | undefined {

console.log(`[Callback] Before model call for agent: ${context.agentName}`);

// Inspect the last user message in the request contents

const lastUserMessage = request.contents?.at(-1)?.parts?.[0]?.text ?? "";

console.log(`[Callback] Inspecting last user message: '${lastUserMessage}'`);

// --- Modification Example ---

// Add a prefix to the system instruction.

// We create a deep copy to avoid modifying the original agent's config object.

const modifiedConfig = JSON.parse(JSON.stringify(request.config));

const originalInstructionText =

modifiedConfig.systemInstruction?.parts?.[0]?.text ?? "";

const prefix = "[Modified by Callback] ";

modifiedConfig.systemInstruction = {

role: "system",

parts: [{ text: prefix + originalInstructionText }],

};

request.config = modifiedConfig; // Assign the modified config back to the request

console.log(

`[Callback] Modified system instruction to: '${modifiedConfig.systemInstruction.parts[0].text}'`

);

// --- Skip Example ---

// Check if the last user message contains "BLOCK"

if (lastUserMessage.toUpperCase().includes("BLOCK")) {

console.log("[Callback] 'BLOCK' keyword found. Skipping LLM call.");

// Return an LlmResponse to skip the actual LLM call

return {

content: {

role: "model",

parts: [

{ text: "LLM call was blocked by the before_model_callback." },

],

},

};

}

console.log("[Callback] Proceeding with LLM call.");

// Return undefined to allow the (modified) request to go to the LLM

return undefined;

}

// --- Create LlmAgent and Assign Callback ---

const myLlmAgent = new LlmAgent({

name: "ModelCallbackAgent",

model: MODEL_NAME,

instruction: "You are a helpful assistant.", // Base instruction

description: "An LLM agent demonstrating before_model_callback",

beforeModelCallback: simpleBeforeModelModifier, // Assign the function here

});

// --- Agent Interaction Logic ---

async function callAgentAndPrint(

runner: InMemoryRunner,

query: string,

sessionId: string

) {

console.log(`\n>>> Calling Agent with query: "${query}"`);

let finalResponseContent = "No final response received.";

const events = runner.runAsync({ userId: USER_ID, sessionId, newMessage: createUserContent(query) });

for await (const event of events) {

if (isFinalResponse(event) && event.content?.parts?.length) {

finalResponseContent = event.content.parts

.map((part: { text?: string }) => part.text ?? "")

.join("");

}

}

console.log("<<< Agent Response: ", finalResponseContent);

}

// --- Run Interactions ---

async function main() {

const runner = new InMemoryRunner({ agent: myLlmAgent, appName: APP_NAME });

// Scenario 1: The callback will find "BLOCK" and skip the model call

await runner.sessionService.createSession({

appName: APP_NAME,

userId: USER_ID,

sessionId: SESSION_ID_BLOCK,

});

await callAgentAndPrint(

runner,

"write a joke about BLOCK",

SESSION_ID_BLOCK

);

// Scenario 2: The callback will modify the instruction and proceed

await runner.sessionService.createSession({

appName: APP_NAME,

userId: USER_ID,

sessionId: SESSION_ID_NORMAL,

});

await callAgentAndPrint(runner, "write a short poem", SESSION_ID_NORMAL);

}

main();

package main

import (

"context"

"fmt"

"log"

"strings"

"google.golang.org/adk/agent"

"google.golang.org/adk/agent/llmagent"

"google.golang.org/adk/model"

"google.golang.org/adk/model/gemini"

"google.golang.org/adk/runner"

"google.golang.org/adk/session"

"google.golang.org/genai"

)

// onBeforeModelGuardrail is a callback that inspects the LLM request.

// If it contains a forbidden topic, it blocks the request and returns a

// predefined response. Otherwise, it allows the request to proceed.

func onBeforeModelGuardrail(ctx agent.CallbackContext, req *model.LLMRequest) (*model.LLMResponse, error) {

log.Println("--- onBeforeModelGuardrail Callback Triggered ---")

// Inspect the request content for forbidden topics.

for _, content := range req.Contents {

for _, part := range content.Parts {

if strings.Contains(part.Text, "finance") {

log.Println("Forbidden topic 'finance' detected. Blocking LLM call.")

// By returning a non-nil response, we override the default behavior

// and prevent the actual LLM call.

return &model.LLMResponse{

Content: &genai.Content{

Parts: []*genai.Part{{Text: "I'm sorry, but I cannot discuss financial topics."}},

Role: "model",

},

}, nil

}

}

}

log.Println("No forbidden topics found. Allowing LLM call to proceed.")

// Returning nil allows the default LLM call to proceed.

return nil, nil

}

func runGuardrailExample() {

const (

appName = "GuardrailApp"

userID = "test_user_456"

)

ctx := context.Background()

geminiModel, err := gemini.NewModel(ctx, modelName, &genai.ClientConfig{})

if err != nil {

log.Fatalf("Failed to create model: %v", err)

}

agentCfg := llmagent.Config{

Name: "ChatAgent",

Model: geminiModel,

BeforeModelCallbacks: []llmagent.BeforeModelCallback{onBeforeModelGuardrail},

}

chatAgent, err := llmagent.New(agentCfg)

if err != nil {

log.Fatalf("Failed to create agent: %v", err)

}

sessionService := session.InMemoryService()

r, err := runner.New(runner.Config{

AppName: appName,

Agent: chatAgent,

SessionService: sessionService,

})

if err != nil {

log.Fatalf("Failed to create runner: %v", err)

}

import com.google.adk.agents.CallbackContext;

import com.google.adk.agents.LlmAgent;

import com.google.adk.events.Event;

import com.google.adk.models.LlmRequest;

import com.google.adk.models.LlmResponse;

import com.google.adk.runner.InMemoryRunner;

import com.google.adk.sessions.Session;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.Part;

import io.reactivex.rxjava3.core.Flowable;

import java.util.ArrayList;

import java.util.List;

import java.util.Optional;

import java.util.stream.Collectors;

public class BeforeModelGuardrailExample {

private static final String MODEL_ID = "gemini-2.0-flash";

private static final String APP_NAME = "guardrail_app";

private static final String USER_ID = "user_1";

public static void main(String[] args) {

BeforeModelGuardrailExample example = new BeforeModelGuardrailExample();

example.defineAgentAndRun("Tell me about quantum computing. This is a test.");

}

// --- Define your callback logic ---

// Looks for the word "BLOCK" in the user prompt and blocks the call to LLM if found.

// Otherwise the LLM call proceeds as usual.

public Optional<LlmResponse> simpleBeforeModelModifier(

CallbackContext callbackContext, LlmRequest llmRequest) {

System.out.println("[Callback] Before model call for agent: " + callbackContext.agentName());

// Inspect the last user message in the request contents

String lastUserMessageText = "";

List<Content> requestContents = llmRequest.contents();

if (requestContents != null && !requestContents.isEmpty()) {

Content lastContent = requestContents.get(requestContents.size() - 1);

if (lastContent.role().isPresent() && "user".equals(lastContent.role().get())) {

lastUserMessageText =

lastContent.parts().orElse(List.of()).stream()

.flatMap(part -> part.text().stream())

.collect(Collectors.joining(" ")); // Concatenate text from all parts

}

}

System.out.println("[Callback] Inspecting last user message: '" + lastUserMessageText + "'");

String prefix = "[Modified by Callback] ";

GenerateContentConfig currentConfig =

llmRequest.config().orElse(GenerateContentConfig.builder().build());

Optional<Content> optOriginalSystemInstruction = currentConfig.systemInstruction();

Content conceptualModifiedSystemInstruction;

if (optOriginalSystemInstruction.isPresent()) {

Content originalSystemInstruction = optOriginalSystemInstruction.get();

List<Part> originalParts =

new ArrayList<>(originalSystemInstruction.parts().orElse(List.of()));

String originalText = "";

if (!originalParts.isEmpty()) {

Part firstPart = originalParts.get(0);

if (firstPart.text().isPresent()) {

originalText = firstPart.text().get();

}

originalParts.set(0, Part.fromText(prefix + originalText));

} else {

originalParts.add(Part.fromText(prefix));

}

conceptualModifiedSystemInstruction =

originalSystemInstruction.toBuilder().parts(originalParts).build();

} else {

conceptualModifiedSystemInstruction =

Content.builder()

.role("system")

.parts(List.of(Part.fromText(prefix)))

.build();

}

// This demonstrates building a new LlmRequest with the modified config.

llmRequest =

llmRequest.toBuilder()

.config(

currentConfig.toBuilder()

.systemInstruction(conceptualModifiedSystemInstruction)

.build())

.build();

System.out.println(

"[Callback] Conceptually modified system instruction is: '"

+ llmRequest.config().get().systemInstruction().get().parts().get().get(0).text().get());

// --- Skip Example ---

// Check if the last user message contains "BLOCK"

if (lastUserMessageText.toUpperCase().contains("BLOCK")) {

System.out.println("[Callback] 'BLOCK' keyword found. Skipping LLM call.");

LlmResponse skipResponse =

LlmResponse.builder()

.content(

Content.builder()

.role("model")

.parts(

List.of(

Part.builder()

.text("LLM call was blocked by before_model_callback.")

.build()))

.build())

.build();

return Optional.of(skipResponse);

}

System.out.println("[Callback] Proceeding with LLM call.");

// Return Optional.empty() to allow the (modified) request to go to the LLM

return Optional.empty();

}

public void defineAgentAndRun(String prompt) {

// --- Create LlmAgent and Assign Callback ---

LlmAgent myLlmAgent =

LlmAgent.builder()

.name("ModelCallbackAgent")

.model(MODEL_ID)

.instruction("You are a helpful assistant.") // Base instruction

.description("An LLM agent demonstrating before_model_callback")

.beforeModelCallbackSync(this::simpleBeforeModelModifier) // Assign the callback here

.build();

// Session and Runner

InMemoryRunner runner = new InMemoryRunner(myLlmAgent, APP_NAME);

// InMemoryRunner automatically creates a session service. Create a session using the service

Session session = runner.sessionService().createSession(APP_NAME, USER_ID).blockingGet();

Content userMessage =

Content.fromParts(Part.fromText(prompt));

// Run the agent

Flowable<Event> eventStream = runner.runAsync(USER_ID, session.id(), userMessage);

// Stream event response

eventStream.blockingForEach(

event -> {

if (event.finalResponse()) {

System.out.println(event.stringifyContent());

}

});

}

}

通过理解这种返回 None 与返回特定对象的机制,你可以精确控制智能体的执行路径,使回调成为使用 ADK 构建复杂可靠智能体的重要工具。